NVIDIA has introduced a range of new products and updates aimed at enhancing generative AI performance on desktops and laptops. These include the GeForce RTX SUPER series of desktop GPUs, generative AI-enabled laptops from leading manufacturers, and a suite of new AI software and tools for both developers and consumers.

The GeForce RTX SUPER series, announced at CES, comprises the RTX 4080 SUPER, RTX 4070 Ti SUPER, and RTX 4070 SUPER graphics cards. NVIDIA positions them for AI workloads. According to NVIDIA, the RTX 4080 SUPER generates AI video 1.5 times faster and images 1.7 times faster than the previous-generation RTX 3080 Ti, and its Tensor Cores deliver up to 836 trillion operations per second.

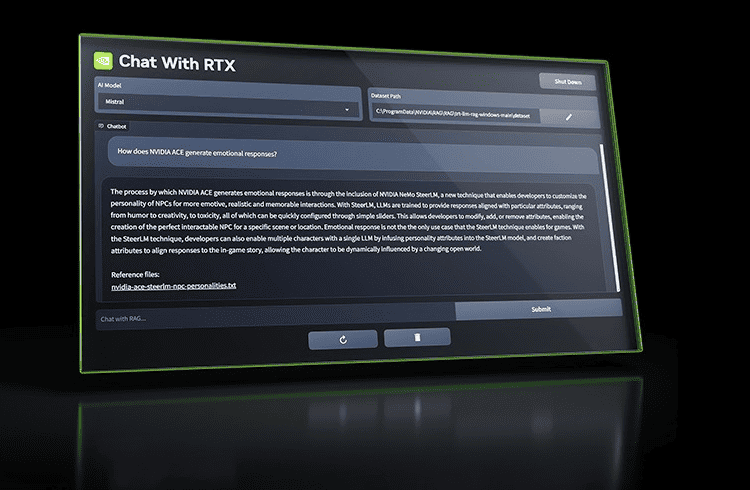

Building on this hardware, NVIDIA also released a tech demo called Chat with RTX, which lets users interact with their own data through large language models (LLMs). NVIDIA says it will also be available as an open-source project.

Why is this so important? Chat with RTX is one of the first mainstream ways for "ordinary folks" to run an LLM locally on "ordinary" computer hardware. It paves the way for more LLM services to run locally, which could eventually lead to a fully local smart home, one with an LLM backing its understanding of what you are doing.

For more detailed information about Chat with RTX, check out NVIDIA's website.